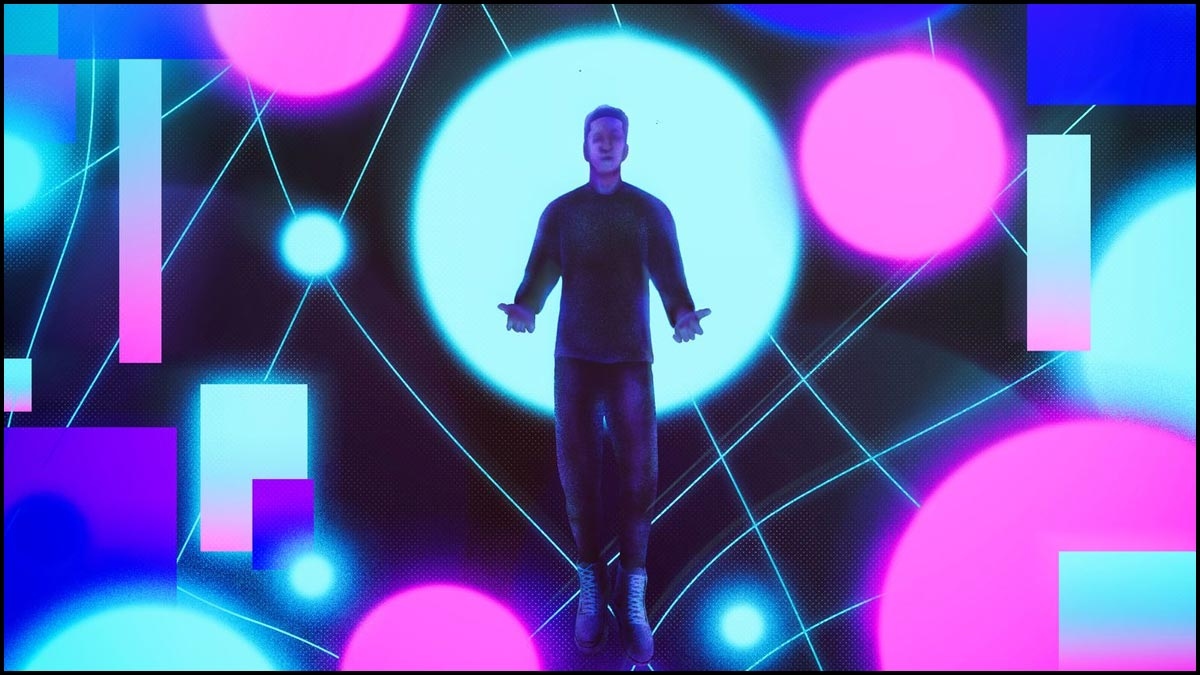

Digital Replicas vs. Performers: The No Fakes Act Steps In


A bipartisan bill would protect actors, musicians, and artists from illicit digital replication of their appearances and voices.

The "Nurture Originals, Foster Art, and Keep Entertainment Safe Act of 2023," or "No Fakes Act," aims to harmonize the laws governing the use of a person's likeness, name, and voice. The bill, co-sponsored by Senators Chris Coons (D-DE), Marsha Blackburn (R-TN), Amy Klobuchar (D-MN), and Thom Tillis (R-NC), would prohibit the creation of digital replicas of a person without their consent. Exceptions cover news, public affairs, sports broadcasts, documentaries, and biographical works. These rights would last for 70 years after the individual's death.

The proposed law also permits parody, satire, and criticism, as well as commercial uses, such as advertising, connected to news, documentaries, and parodies. Under these provisions, individuals and rights holders such as estates and record labels could file civil lawsuits. The bill emphasizes that simply labeling a digital replica as unauthorized is not enough to avoid liability.

Likeness laws vary by state, and the No Fakes Act would standardize them. New York is one of the few states with a law that specifically addresses digital replicas of deceased people in scripted works or live performances.

Likeness laws have become more relevant as generative AI tools make it possible to replicate voices and generate images of celebrities. One concern is unauthorized AI-generated songs that use artists' voices. Because likeness laws are fragmented, protecting artists' publicity rights across state lines is difficult.

Hollywood studios' interest in digitally scanning actors has heightened concerns about AI replicas. The Recording Industry Association of America (RIAA) and the Human Artistry Campaign support the bill as a way to safeguard intellectual property.

Critics say the No Fakes Act merely repackages existing law. They worry that the bill could complicate intellectual property law while adding few safeguards beyond those already provided by copyright and right-of-publicity law.

The No Fakes Act is a positive step toward preventing unauthorized digital copying, but its impact on intellectual property law is concerning. Lawmakers must balance AI regulation with IP rights.


Bala Vignesh
